- Big data analysis with apache spark how to#

- Big data analysis with apache spark series#

Once we start storing connected data in a graph database and running analytics on it, we need tools to visualize the patterns behind the relationships between the data entities. Graph data visualization tools include D3.js, Linkurious, and GraphLab Canvas.

There are several frameworks that we can use for processing graph data and running predictive analytics on it. These frameworks include Spark GraphX, Apache Flink's Gelly, and GraphLab. In this article, we’ll focus on Spark GraphX for analyzing graph data.

There are also several graph generators, discussed in the Gelly framework documentation, such as Cycle Graph, Grid Graph, Hypercube Graph, Path Graph, and Star Graph.
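To give a feel for what the graph generators named above produce, here is a minimal plain-Python sketch. It is not Gelly's API: the function names, the integer vertex ids, and the choice to emit each undirected edge once as a `(u, v)` pair are all assumptions made for illustration, and the grid here is a simple two-dimensional grid without wrap-around.

```python
def path_graph(n):
    """Vertices 0..n-1 connected in a line."""
    return [(i, i + 1) for i in range(n - 1)]


def cycle_graph(n):
    """A path whose last vertex connects back to the first (assumes n >= 3)."""
    return [(i, (i + 1) % n) for i in range(n)]


def star_graph(n):
    """Vertex 0 is the hub; every other vertex connects only to it."""
    return [(0, i) for i in range(1, n)]


def grid_graph(rows, cols):
    """2D grid: each vertex links to its right and lower neighbor, if any."""
    edges = []
    for r in range(rows):
        for c in range(cols):
            v = r * cols + c
            if c + 1 < cols:
                edges.append((v, v + 1))
            if r + 1 < rows:
                edges.append((v, v + cols))
    return edges


def hypercube_graph(dim):
    """Vertices are the integers 0..2**dim - 1; two vertices are adjacent
    when their binary representations differ in exactly one bit."""
    edges = []
    for v in range(2 ** dim):
        for bit in range(dim):
            u = v ^ (1 << bit)
            if v < u:  # emit each undirected edge once
                edges.append((v, u))
    return edges


print(cycle_graph(4))      # [(0, 1), (1, 2), (2, 3), (3, 0)]
print(star_graph(4))       # [(0, 1), (0, 2), (0, 3)]
print(hypercube_graph(2))  # [(0, 1), (0, 2), (1, 3), (2, 3)]
```

Synthetic graphs like these are handy for testing graph algorithms on inputs whose structure is known in advance.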

It's important to remember that the graph data we use in real-world applications is dynamic and changes over time. The advantage of graph databases is that they uncover patterns that are usually difficult to detect using traditional data models and analytics approaches. Without a graph database, a use case like finding common friends becomes an expensive query that pulls data from all the tables with complex joins and query criteria. Graph database examples include Neo4j, DataStax Enterprise Graph, AllegroGraph, InfiniteGraph, and OrientDB.

Graph data modeling includes defining the nodes (also known as vertices), the relationships (also known as edges), and the labels for those nodes and relationships. Graph databases are modeled based on what Jim Webber calls Query-driven Modeling, which means the data model is open to domain experts rather than just database specialists and supports team collaboration for modeling and evolution. Graph database products typically include a query language (Cypher, if you are using Neo4j as the database) to manage the graph data stored in the database.

Graph data processing mainly includes graph traversal to find specific nodes in the graph data set that match the specified patterns, and then to locate the associated nodes and relationships so we can see the patterns of connections between different entities. The data processing pipeline typically includes pre-processing of data (which includes loading, transformation, and filtering). A typical graph analytics tool should provide the flexibility to work with both graphs and collections, so we can combine data analytics tasks like ETL, exploratory analysis, and iterative graph computation within a single system without having to use several different frameworks and tools.
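The common-friends use case and the idea of graph traversal mentioned above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the friendship data and the helper names (`build_adjacency`, `common_friends`, `friends_of_friends`) are hypothetical, and a real graph database or GraphX job would express the same query in its own API.

```python
from collections import defaultdict

# Hypothetical sample data: each undirected friendship is listed once.
friendships = [
    ("john", "mike"),
    ("john", "sara"),
    ("mike", "sara"),
    ("mike", "bob"),
    ("sara", "bob"),
]


def build_adjacency(pairs):
    """Pre-processing step: turn an edge list into an adjacency map."""
    adj = defaultdict(set)
    for a, b in pairs:
        adj[a].add(b)
        adj[b].add(a)
    return adj


def common_friends(adj, a, b):
    """Friends shared by a and b: the intersection of their neighbor sets."""
    return adj.get(a, set()) & adj.get(b, set())


def friends_of_friends(adj, person):
    """Two-hop traversal: people reachable through a friend who are
    neither the person nor already a direct friend."""
    direct = adj.get(person, set())
    two_hops = set()
    for friend in direct:
        two_hops |= adj.get(friend, set())
    return two_hops - direct - {person}


adj = build_adjacency(friendships)
print(sorted(common_friends(adj, "john", "bob")))  # ['mike', 'sara']
print(sorted(friends_of_friends(adj, "john")))     # ['bob']
```

With the relationships stored as adjacency sets, the common-friends query is a single set intersection instead of a multi-table join, which is the kind of saving the graph model is meant to provide.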
There are three different topics to cover when we discuss graph data related technologies: graph databases, graph data analytics, and graph data visualization. Let’s discuss these topics briefly to learn how they differ from each other and how they complement each other to help us develop a comprehensive graph-based big data processing and analytics architecture.

Unlike traditional data models, data entities as well as the relationships between those entities are the core elements in graph data models. When working on graph data, we are interested in the entities and the connections between them. For example, if we are working on a social network application, we would be interested in the details of a particular user (let’s say John), but we would also want to model, store, and retrieve the associations between this user and other users in the network. Examples of these associations are “John is a friend of Mike” or “John read the book authored by Bob.”
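As a rough illustration of entities and relationships being first-class elements, the following plain-Python sketch models the two example associations above. The class names, edge labels (`FRIEND_OF`, `READ`, `AUTHORED`), and the `book1` vertex are hypothetical choices for this sketch, not part of GraphX or any graph database API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Edge:
    """A directed, labeled relationship between two vertices."""
    src: str
    dst: str
    label: str


@dataclass
class PropertyGraph:
    """Vertices keyed by id with a property dict, plus a list of labeled edges."""
    vertices: dict = field(default_factory=dict)
    edges: list = field(default_factory=list)

    def add_vertex(self, vid, **props):
        self.vertices[vid] = props

    def add_edge(self, src, dst, label):
        # Both endpoints must exist before they can be connected.
        if src not in self.vertices or dst not in self.vertices:
            raise KeyError("unknown vertex")
        self.edges.append(Edge(src, dst, label))

    def neighbors(self, vid, label=None):
        """Ids reachable from vid over outgoing edges, optionally filtered by label."""
        return [e.dst for e in self.edges
                if e.src == vid and (label is None or e.label == label)]


g = PropertyGraph()
g.add_vertex("john", kind="User")
g.add_vertex("mike", kind="User")
g.add_vertex("bob", kind="User")
g.add_vertex("book1", kind="Book")

g.add_edge("john", "mike", "FRIEND_OF")  # "John is a friend of Mike"
g.add_edge("john", "book1", "READ")      # "John read the book ...
g.add_edge("bob", "book1", "AUTHORED")   # ... authored by Bob"

print(g.neighbors("john"))          # ['mike', 'book1']
print(g.neighbors("john", "READ"))  # ['book1']
```

Note that the relationship itself carries information (its label), which is what distinguishes this model from a plain list of records.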

In this final installment, we will focus on how to process graph data and on Spark’s graph data analytics library, GraphX.

First, let’s look at what graph data is and why it’s critical to process this type of data in enterprise big data applications.


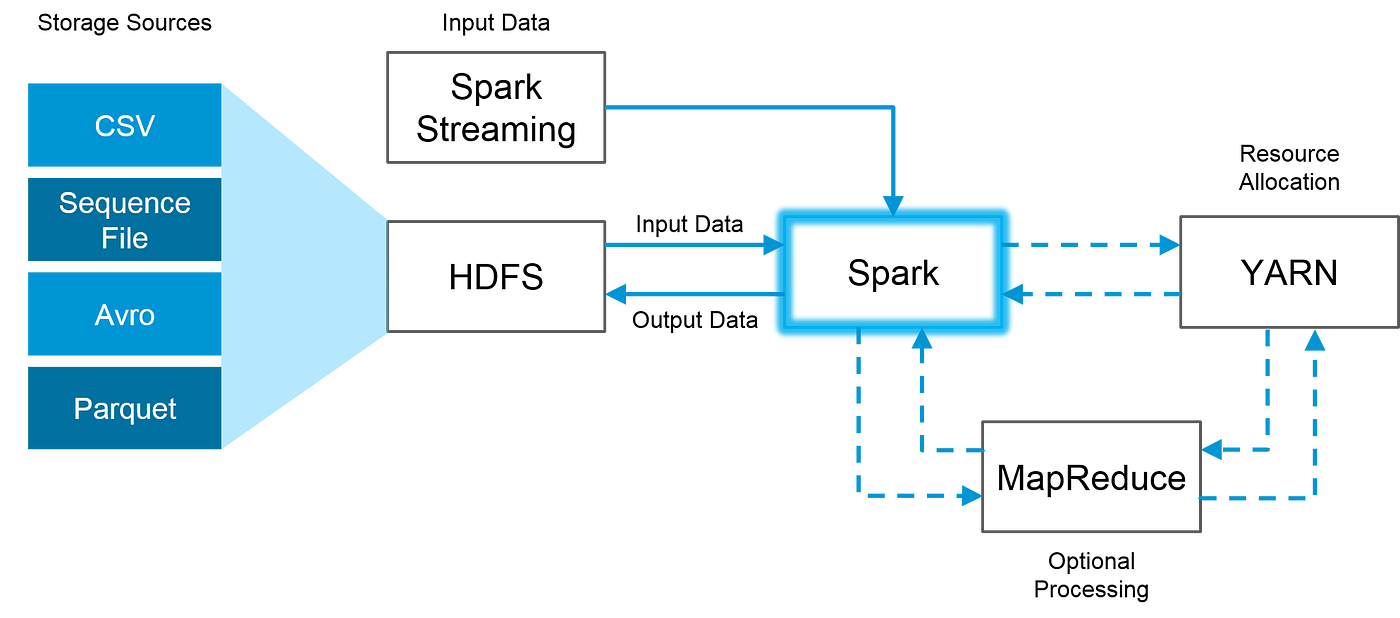

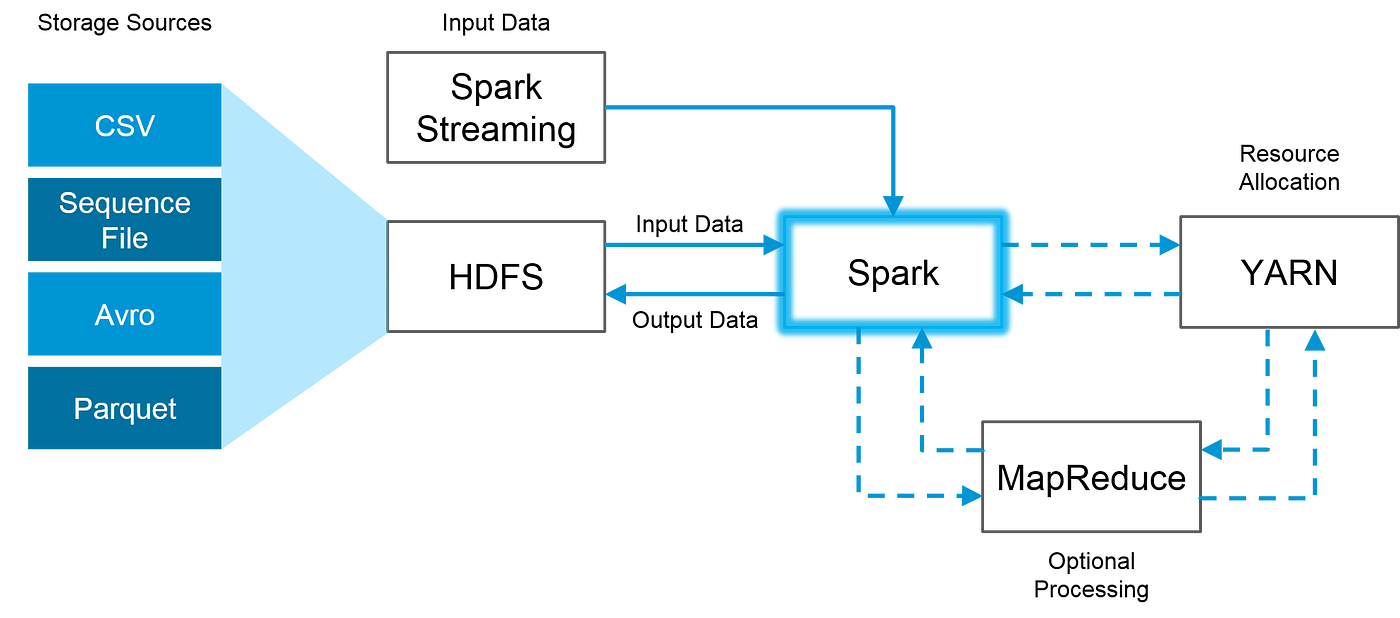

We have seen how Apache Spark can be used for processing batch data (Spark Core) as well as real-time data (Spark Streaming). Sometimes the data we need to deal with is connected in nature. For example, in a social media application, we have entities like Users, Articles, and Likes that need to be managed and processed as a single logical unit of data. This type of data is called graph data, and running analytics on it requires different techniques and approaches than traditional data processing.

In the previous articles in this series, titled “Big Data Processing with Apache Spark”, we learned about the Apache Spark framework and its different libraries for big data processing, starting with the first article, Spark Introduction (Part 1). We then looked at specific libraries: Spark SQL (Part 2), Spark Streaming (Part 3), and both machine learning packages, Spark MLlib (Part 4) and Spark ML (Part 5).
